Introduction

Language processing in the human brain is a complex, highly orchestrated function that engages multiple interconnected regions. Decades of research – from classic lesion studies to modern neuroimaging – have converged on the view that language relies on a distributed network of brain areas in the cerebral cortex, predominantly in the left hemisphere [frontiersin.org; pubmed.ncbi.nlm.nih.gov]. This network enables us to comprehend and produce spoken and written language, transforming sounds or visual symbols into meaningful communication. In recent years (approximately 2019–2024), advanced techniques like functional MRI (fMRI), electrocorticography (ECoG), and diffusion tractography have refined our understanding of where and how language is processed in the brain. Below, we summarize current neuroscientific insights into the key brain regions for language, the lateralization of language function, the roles of different lobes in spoken versus written language, and the mechanisms by which the brain processes speech and text. We also highlight findings from human studies (including neuroimaging and aphasia cases) and note comparative insights from primate brains where relevant, to illustrate the evolutionary context of our language network.

Core Brain Regions and Language Networks

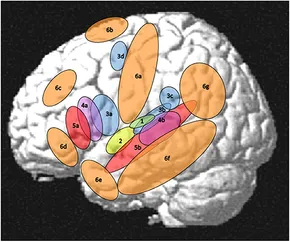

Key language-related regions in the left hemisphere. Colored regions (green/red/purple) indicate the left perisylvian “core” language network, including auditory cortex, inferior frontal gyrus, superior temporal gyrus, and temporoparietal areas. Orange regions mark additional “extended” areas that contribute to language processing (e.g. motor, prefrontal, and temporal pole regions).

At the heart of language processing is a left-dominant perisylvian network – a set of areas around the Sylvian fissure in the left frontal, temporal, and parietal lobes [frontiersin.org]. This core language network includes the left inferior frontal gyrus (Broca’s area and adjacent premotor regions), the left superior temporal gyrus (Wernicke’s area and auditory cortex), and a temporoparietal interface in the left inferior parietal region [frontiersin.org]. These regions are heavily interconnected and collectively specialized for language: they activate robustly during language comprehension and production while showing little response to non-language tasks [pubmed.ncbi.nlm.nih.gov]. Historically, Broca’s and Wernicke’s areas were identified as critical hubs (for speech production and comprehension, respectively) from lesion studies, and those findings remain fundamentally supported [pmc.ncbi.nlm.nih.gov]. However, contemporary research has painted a more nuanced picture in which language processing is distributed across a network of frontal-temporal-parietal areas rather than a single center [frontiersin.org]. For example, neuroimaging shows that even simple language tasks can elicit activity in widespread areas of the left hemisphere (and sometimes the right hemisphere as well), all of which work together to process different aspects of language [frontiersin.org]. Importantly, the core language regions serve as “convergence zones” that integrate information from many other specialized areas during real-life language use [frontiersin.org]. In summary, the human brain’s language system is anchored in left inferior-frontal and superior-temporal cortices with support from adjacent premotor and parietal areas – a dedicated network that enables phonology, syntax, and basic semantics.

Beyond this core perisylvian circuit, additional brain regions contribute to language processing depending on context and task demands. Recent reviews emphasize that natural language use recruits multiple networks – for memory, attention, social cognition, sensorimotor integration, etc. – in concert with the core language network [frontiersin.org]. For instance, understanding a sentence in context may engage frontal executive regions (for attention and cognitive control) and midline or temporal lobe structures involved in memory and social inference [frontiersin.org]. In essence, language is not an isolated module – it interfaces with motor areas (for speaking and gesturing), auditory and visual areas (for perceiving speech and text), emotional centers (to process tone and affect), and so on [frontiersin.org]. This expanded view is supported by fMRI studies showing “wide-spread activation patterns beyond [the core language] network” during complex language tasks [frontiersin.org]. Thus, current models describe a hierarchy of language processing: a left-lateralized core network handling the formal linguistic computations (sounds, words, syntax) [frontiersin.org], supplemented by a web of other regions that bring in meaning from memory, context, and sensory-motor experience to enrich communication [frontiersin.org]. This multiple-network perspective aligns with the idea that using language “is more than single-word processing” and requires integration of many cognitive functions [researchgate.net]. It is also consistent with clinical observations that damage to classical language areas causes profound aphasia, yet real-world language deficits can also arise from injuries to supporting regions (e.g. right hemisphere or subcortical damage affecting prosody or fluency). In summary, neuroscience today views language processing as emerging from distributed, dynamic brain networks, with a prominent left perisylvian core and many interacting partners across both hemispheres.

Hemispheric Lateralization of Language

One of the clearest principles of language organization is its hemispheric lateralization. In the vast majority of individuals, language functions are dominant in the left hemisphere. Approximately 95% of right-handed people show left-hemisphere dominance for language processing [pmc.ncbi.nlm.nih.gov], as confirmed by fMRI and classical Wada tests. (Left-handed people show more variability, but even in left-handers about 70–80% still have left-dominant language [pmc.ncbi.nlm.nih.gov].) This left-sided dominance was first noted by Broca and Wernicke in the 19th century and has been consistently supported by modern studies [pmc.ncbi.nlm.nih.gov]. For example, strokes or lesions in the left frontal or temporal lobe often cause severe aphasia (language impairment), whereas similar right hemisphere lesions usually do not produce the classic aphasic syndromes [frontiersin.org]. Left-hemisphere superiority spans various aspects of language: decoding speech sounds, understanding words and sentences, and generating fluent speech are all strongly left-lateralized processes in most people [pmc.ncbi.nlm.nih.gov]. Neuroimaging likewise shows greater left than right activation in core language regions during tasks like listening to speech, reading, or speaking [pmc.ncbi.nlm.nih.gov]. In short, the left cerebral hemisphere is the primary “language hemisphere” for the majority of humans.
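
The left-versus-right comparison described above is often summarized in neuroimaging work as a single lateralization index, LI = (L − R) / (L + R), where L and R are activation measures from homologous left and right regions. The sketch below is illustrative only: the function names, the example voxel counts, and the 0.2 cutoff for calling a result "left" or "right" dominant are assumptions chosen for the example, not values taken from the studies cited here.

```python
def lateralization_index(left: float, right: float) -> float:
    """Return LI = (L - R) / (L + R) for non-negative activation measures.

    LI ranges from -1 (entirely right) to +1 (entirely left).
    """
    if left < 0 or right < 0:
        raise ValueError("activation measures must be non-negative")
    total = left + right
    if total == 0:
        raise ValueError("no activation in either hemisphere")
    return (left - right) / total


def classify_dominance(li: float, threshold: float = 0.2) -> str:
    """Label dominance from LI; the 0.2 cutoff is an assumed convention."""
    if li > threshold:
        return "left"
    if li < -threshold:
        return "right"
    return "bilateral"


# Hypothetical counts of active voxels in left and right language regions.
li = lateralization_index(left=900, right=300)
print(li, classify_dominance(li))
```

An index near zero would correspond to the more bilateral pattern that the text notes is more common among left-handers.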

That said, the right hemisphere is by no means silent during language processing – it plays important supporting roles. Brain imaging reveals that while left perisylvian areas do the heavy lifting for linguistic coding (syntax, phonology, literal semantics), the right hemisphere homologues of these areas often activate in parallel, especially for certain language tasks [frontiersin.org]. The right hemisphere is particularly involved in processing prosody, intonation, and the emotional tone of speech. For example, studies of affective prosody (the emotional coloring of spoken language) show right-lateralized activity in the superior temporal sulcus and inferior frontal gyrus when subjects interpret emotional tones of voice [frontiersin.org]. The right temporal lobe has been linked to recognizing speaker intonation, sarcasm, humor, and other pragmatic aspects of communication that go beyond literal word meaning [frontiersin.org]. Additionally, the right hemisphere appears to contribute to discourse-level processing – maintaining story context and integrating information across sentences – as well as to certain memory and attention functions that aid language comprehension [frontiersin.org]. In patients, damage to right hemisphere language homologues can lead to deficits in understanding jokes, emotion in speech, or using context appropriately, even if basic word comprehension is intact. Thus, while core language abilities (grammar, word decoding) are left-dominant, the right hemisphere provides complementary functions: it processes the “music” of language (prosody, rhythm), aids in high-level interpretation, and can partly compensate if the left side is injured [frontiersin.org].

This bilateral contribution is also reflected in the auditory system – the two hemispheres encode speech sounds over different timescales (left-preferring brief phonetic chunks, right-preferring longer syllabic patterns) to jointly analyze spoken language [frontiersin.org]. In summary, language processing is strongly lateralized to the left hemisphere, but it engages both hemispheres in a complementary fashion. The left hemisphere houses specialized circuits for linguistic coding and production, while the right hemisphere handles prosodic, emotional, and integrative aspects of communication, making language a truly whole-brain endeavor in practice.

Contributions of Different Lobes to Language Processing

Language processing engages multiple lobes of the brain in distinct but interacting ways. Broadly, the frontal and temporal lobes form the core of language networks (for producing and understanding language), the parietal lobe provides an interface for integrating modalities and supporting phonological working memory, and the occipital lobe contributes visual processing for reading. Below is a breakdown of the major lobes/regions involved in both spoken and written language:

- Frontal Lobe (Inferior Frontal Gyrus and Motor Regions): The left frontal lobe – in particular the left inferior frontal gyrus (IFG) – is crucial for language production and the assembly of complex syntax. Broca’s area, located in the posterior IFG, has long been associated with speech articulation and grammatical sentence construction [frontiersin.org]. This region becomes active when we speak or form words, whether aloud or in our internal speech, and damage here causes non-fluent aphasia (halting speech with impaired grammar) [pmc.ncbi.nlm.nih.gov]. Beyond speech output, the left IFG also engages in processing sentence structure and resolving ambiguities, essentially acting as a “syntax engine” in the brain [frontiersin.org]. The frontal lobe contribution isn’t only Broca’s area: adjacent premotor cortex and supplementary motor areas help plan and execute the movements of speech (tongue, lips, larynx) [frontiersin.org]. In summary, the frontal lobe provides the motor and syntactic backbone of language – formulating what we want to say and coordinating the muscle commands to say it. (Notably, research also shows that Broca’s region contains both language-specific circuits and nearby domain-general executive circuits [journals.sagepub.com], highlighting the close interplay between linguistic processing and general cognitive control in the frontal lobe.)

- Temporal Lobe (Superior and Middle Temporal Gyrus): The temporal lobe of the left hemisphere is the primary hub for language comprehension. The superior temporal gyrus (STG), which includes primary auditory cortex and Wernicke’s area in the posterior section, is responsible for perceiving and interpreting speech sounds [pmc.ncbi.nlm.nih.gov]. When we hear spoken words, the sound signals arrive at the STG (Heschl’s gyrus for basic auditory processing, and surrounding auditory association cortex for speech-specific analysis). Neurons in mid-to-posterior STG decode the sequence of sounds into phonetic units – this area contains specialized populations that respond to distinct phonemic features (the building blocks of words) [pmc.ncbi.nlm.nih.gov]. Damage to the left STG/Wernicke’s area produces Wernicke’s aphasia, a condition in which patients can hear words but cannot understand their meaning, underscoring the STG’s role as a “speech comprehension” area [pmc.ncbi.nlm.nih.gov]. The middle and inferior temporal gyri of the left temporal lobe are more involved in lexical-semantic processing – i.e. linking word forms to their meanings [frontiersin.org]. These regions serve as a repository for word meanings and concepts; when you hear or read a word, the stored semantic information in the middle temporal lobe is activated to give that word meaning. The anterior temporal pole may also act as an integration hub for combining meanings (useful in sentence comprehension and semantic memory) [frontiersin.org]. In short, the left temporal lobe (upper part) transforms sounds into recognizable words, and (middle/lower parts) attaches meaning to those words [frontiersin.org]. In spoken language and in reading, these temporal areas are indispensable for understanding vocabulary and content.

- Parietal Lobe (Inferior Parietal Cortex – Angular and Supramarginal Gyrus): The inferior parietal lobe, at the junction of temporal and parietal regions, serves as a multimodal integration zone for language. The left angular gyrus and supramarginal gyrus are often activated during tasks that require mapping between different forms of language information – for example, converting written letters to sounds (in reading), or integrating word meanings with spatial/visual contexts. This region is considered a “temporoparietal interface” in the language network [frontiersin.org]. One key function of the inferior parietal lobe is supporting the phonological loop in working memory – when you hear a sentence or a phone number and rehearse it in your mind, or when you prepare to speak a phrase, these parietal areas help maintain and manipulate the sound-based information. They connect with frontal speech areas to facilitate repetition and phonological processing [frontiersin.org]. In fact, a specific area at the Sylvian-parietal boundary (sometimes called area Spt) is involved in linking auditory representations of words to the motor plans for speaking them [frontiersin.org]. Lesions in the left inferior parietal region can lead to conduction aphasia, where patients have trouble repeating words despite good comprehension, thought to result from a disconnection between temporal (perceptual) and frontal (motor) language areas. During reading, the angular gyrus is also important for mapping graphemes to phonemes – i.e. translating written text into the corresponding sounds and meanings [nature.com]. In summary, the parietal lobe provides a bridge between perception and action in language: it is involved in cross-modal integration (sound-letter mapping, linking words with sensory experiences) and in verbal working memory that underlies skills like repeating, reading aloud, or constructing discourse.

- Occipital Lobe (Visual Cortex and Visual Word Form Area): The occipital lobe is the visual processing center, and while it is not classically part of the “language network,” it becomes crucial for written language. Reading starts with the visual cortex registering letters and words. In literate adults, a specialized region in the left occipito-temporal sulcus, at the interface of occipital and temporal lobes, called the Visual Word Form Area (VWFA), responds selectively to written words and letter strings [pmc.ncbi.nlm.nih.gov]. This region, in the left ventral occipitotemporal cortex (often in the fusiform gyrus), is tuned through experience to recognize orthographic patterns (familiar letter combinations) rapidly and almost automatically. Reading depends on the VWFA – if this area is damaged (as in some strokes or in cases of pure alexia), individuals can lose the ability to recognize words by sight [pmc.ncbi.nlm.nih.gov]. In neuroimaging, the VWFA reliably activates when a person reads text, distinguishing real words from unfamiliar strings. Interestingly, the VWFA does not work in isolation; it quickly communicates with language areas in the frontal and temporal lobes during reading [pmc.ncbi.nlm.nih.gov]. For example, when you read a sentence, the VWFA feeds visual word information to the temporal lobe to access meaning and to the frontal areas to sub-vocalize or plan speech. Studies show that functional connectivity between the VWFA and Broca’s area increases during word reading tasks, indicating that the visual word form system is integrated into the broader language network [pmc.ncbi.nlm.nih.gov]. Aside from the VWFA, the occipital lobe’s primary visual areas are of course involved in perceiving written text, and the right occipital cortex can assist (e.g. in peripheral vision or if the left is impaired). But in general, it is the left occipitotemporal cortex that has been highly sculpted by literacy to act as the entry point for printed language. In summary, the occipital lobe (especially the left fusiform area) provides the visual pathway into the language system, allowing us to read and visually recognize words, which are then processed for meaning by the same temporal and frontal language circuits used in spoken language.

Neural Processing of Spoken Language (Auditory Pathway)

How does the brain process a spoken word from the moment it is heard to the point of understanding? Neuroscientific research indicates a staged process along the auditory pathway, with parallel streams for decoding sound and attaching meaning. When a spoken word enters the ear, it is first encoded by the primary auditory cortex (located in Heschl’s gyrus of the superior temporal lobe) – this is the brain’s initial receiver for all sounds. The primary auditory cortex analyzes basic acoustic features (frequencies, intensity, timing) and then passes the information to surrounding auditory association areas in the superior temporal gyrus. These surrounding areas, especially in the mid to posterior STG of the left hemisphere, act as a dedicated speech processing center. Research by Mesgarani, Chang, and others using ECoG (electrocorticography) has shown that populations of neurons in the posterior STG respond selectively to distinct phonetic features in speech (such as specific consonant or vowel sounds), effectively encoding speech sounds into phonological units [pmc.ncbi.nlm.nih.gov]. Thus, the mid-posterior STG can be seen as an “auditory word form” area – a perceptual region that recognizes spoken words or at least their sound patterns [frontiersin.org]. In models of language, this corresponds to the early ventral stream for speech recognition, which maps complex acoustic signals onto stored representations of words [frontiersin.org].

As the sounds are identified as familiar word forms, the brain rapidly engages lexical and semantic circuits to interpret the word’s meaning. The neural activity spreads from STG to middle temporal cortex and inferior temporal regions (and likely the anterior temporal lobe), where the word’s semantic information is retrieved [frontiersin.org]. For example, upon hearing the word “apple,” the acoustic pattern is matched to the concept of “apple” in the middle temporal gyrus and related semantic memory areas, bringing to mind an apple’s attributes. This is supported by neuroimaging evidence that lexical-semantic areas in the temporal lobe activate after phonological areas during speech comprehension [frontiersin.org]. Meanwhile, frontal language areas (like Broca’s region) may also start to engage, especially if the word is part of a sentence requiring syntactic parsing or if a response is being prepared. The overall process is extremely fast: within about 200 milliseconds of hearing a word, the left STG and temporal lobe show robust activation corresponding to phonemic analysis and word identification [pmc.ncbi.nlm.nih.gov], and shortly thereafter (200–500 ms) inferior frontal regions engage to integrate the word into a grammatical and contextual framework (as seen in EEG/MEG signals like the N400 for semantic processing, followed slightly later by the P600 for syntactic integration). Thus, understanding a spoken word is a temporal cascade from auditory perception to phonological decoding to lexical-semantic access.

Crucially, there are two interacting pathways for processing spoken language: a ventral stream that supports comprehension (mapping sounds to meaning) and a dorsal stream that supports repetition and articulation (mapping sounds to motor output). In the ventral stream (largely left temporal lobe), the focus is on recognizing the speech input and retrieving meaning [frontiersin.org]. In parallel, the dorsal stream connects the auditory representation of the word to the frontal lobe’s speech motor system [frontiersin.org]. This dorsal pathway involves the left inferior parietal region and premotor cortex: essentially, it is a circuit that links Wernicke’s area to Broca’s area via the arcuate fasciculus white matter tract. It comes into play especially when we need to repeat a word or imitate a heard sentence, converting the heard phonological code into a sequence of articulation. Even during passive listening, the brain’s dorsal stream might do some “motor echoing” – evidence from ECoG suggests that motor cortex can show slight activation to heard speech, possibly reflecting simulations of the movements needed to produce those sounds. The dual-stream model (Hickok & Poeppel) thus emphasizes that speech perception and production are intimately connected: the ventral stream lets us understand, and the dorsal stream lets us verbally respond [frontiersin.org].

As a concrete example, consider hearing the sentence “The cat is on the mat.” First, primary auditory cortex (A1) responds to the sound waves, then the left STG parses the sequence into phonemes and words (“the”, “cat”, “is”, etc.). Next, the meaning of each word and the overall semantic scenario are processed in the middle temporal lobe (recognizing “cat” as an animal, “mat” as an object, etc., and understanding the spatial relation). Simultaneously, Broca’s area in the frontal lobe may start organizing the grammar (identifying “the cat” as subject, “on the mat” as a locative phrase). If you were to repeat the sentence or answer a question about it, the dorsal stream (via parietal cortex) would relay the phonological information to your speech motor planning areas to formulate a response. During this whole process, the right hemisphere also contributes by analyzing prosody (was the sentence spoken with a certain emphasis or emotion?) and by helping to build a mental model of the overall context. By the end of this process, within a second or two, you have a rich understanding of the spoken sentence.

In summary, auditory language processing follows a path from sound reception in the auditory cortex, to speech-specific analysis in the superior temporal gyrus, to widespread activation of meaning in temporal (and frontal) areas, with a parallel motor pathway linking to speech production mechanisms. The superior temporal lobe is a key entry point – a “gateway” that converts sound to language – as evidenced by the profound comprehension deficits when this region is damaged [pmc.ncbi.nlm.nih.gov]. Downstream, the coordination between temporal and frontal language regions (connected by fiber tracts) allows the brain not only to understand speech but also to generate a timely and appropriate spoken response. This remarkable neural process underlies the effortless way we engage in conversation, turning mere vibrations in the air into ideas and thoughts in the mind.

Neural Processing of Written Language (Reading Pathway)

Reading, while not an evolutionarily ancient ability like spoken language, has been “wired into” our language network through learning and experience. Written language processing begins with the visual system and then converges on the same higher-level language areas used for speech. When you read a word, the information flow in the brain is roughly: eyes → occipital visual cortex → Visual Word Form Area (VWFA) in left occipitotemporal cortex → distributed language network (temporal and frontal regions). Each of these stages has been illuminated by neuroimaging and cognitive studies in recent years.

First, as you fixate on a written word, the primary visual cortex (in the occipital lobe) and surrounding visual areas break down the letter shapes, contrast, and lines. This low-level visual information is then channeled to the left ventral occipitotemporal cortex, where the Visual Word Form Area resides. The VWFA is a region that shows selective activation to written words as opposed to other visual stimuli [pmc.ncbi.nlm.nih.gov]. By about 150 milliseconds after seeing a word, the VWFA differentiates whether the stimulus is a real word, a pronounceable pseudoword, or a meaningless string of letters, indicating that it is sensitive to orthographic patterns. In essence, the VWFA functions as a gateway for visually presented text, transforming visual letter sequences into an abstract representation that the language system can understand (sometimes described as an “orthographic lexicon”) [pmc.ncbi.nlm.nih.gov]. This region develops with literacy; in illiterate individuals or young children, it is not yet specialized for words, but as reading is learned, the VWFA becomes finely tuned to the script one is trained on. It’s notable that the VWFA’s selectivity is experience-dependent but also constrained by neural architecture – it consistently occupies a similar location in the left fusiform gyrus across individuals, reflecting how the brain repurposes cortical tissue for reading.

Once the VWFA has identified a string of letters as a familiar word (or at least a possible word), the information is passed to language networks for interpretation. From the occipitotemporal VWFA, there appear to be at least two pathways: a ventral pathway that links to semantic processing regions and a dorsal pathway that links to phonological processing regions [nature.com]. The ventral route goes anteriorly into the temporal lobe – for a known word, the VWFA rapidly activates the left anterior/middle temporal cortex to retrieve the word’s meaning (similar to how hearing a word activates those areas) [frontiersin.org]. This allows for direct recognition of words by sight (often termed “whole-word reading” or orthographic access). The dorsal route involves projecting from occipitotemporal regions to the left inferior parietal cortex (angular gyrus region) and further to the frontal lobe. This pathway is associated with phonological decoding – translating the letters into sounds (grapheme-to-phoneme mapping) and assembling the sounds to figure out the word, which is crucial for reading new or unfamiliar words and is heavily used by beginning readers [nature.com]. In skilled adult reading of familiar words, the ventral semantic route tends to dominate (we recognize words by sight quickly), but the dorsal pathway still operates for unfamiliar words or in languages with complex spelling-to-sound rules. Notably, neuroimaging studies of dyslexic individuals (who struggle with reading) show reduced activation in the left occipitotemporal (VWFA) and temporoparietal regions, and increased reliance on alternative pathways [nature.com]. Dyslexic readers often under-utilize the VWFA and instead engage more effortful dorsal pathways (and even right hemisphere regions) to decode text, highlighting how critical the typical VWFA–temporal coupling is for fluent reading [nature.com].
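
The two reading routes described above can be caricatured in a few lines of code. This is a toy sketch, not a model from the cited studies: the tiny lexicon, the one-letter-to-one-sound rules, and the function name `read_word` are all hypothetical, and real grapheme-to-phoneme mapping is far less regular. It shows only the control flow: a known word is recognized whole and yields a meaning (ventral route), while an unfamiliar string is sounded out letter by letter and yields a pronunciation without a meaning (dorsal route).

```python
# Hypothetical sight vocabulary: written form -> (meaning, pronunciation).
LEXICON = {
    "cat": ("small domesticated feline", "k-a-t"),
    "mat": ("flat floor covering", "m-a-t"),
}

# Hypothetical one-letter grapheme-to-phoneme rules (much simpler than English).
GRAPHEME_TO_PHONEME = {
    "a": "a", "b": "b", "c": "k", "d": "d", "i": "i",
    "m": "m", "n": "n", "p": "p", "t": "t",
}


def read_word(word):
    """Return (route, meaning, pronunciation) for a written word."""
    word = word.lower()
    if word in LEXICON:
        # Ventral route: whole-word recognition gives direct access to meaning.
        meaning, pronunciation = LEXICON[word]
        return "ventral", meaning, pronunciation
    # Dorsal route: assemble sounds letter by letter; no stored meaning exists.
    phonemes = [GRAPHEME_TO_PHONEME.get(letter, "?") for letter in word]
    return "dorsal", None, "-".join(phonemes)


print(read_word("cat"))  # familiar word, recognized by sight
print(read_word("dap"))  # pseudoword, decoded by sounding out
```

In this caricature, a reader with a small sight vocabulary falls back on the slower letter-by-letter branch more often, which loosely mirrors the heavier dorsal-route use the text attributes to beginning readers.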

As visual word information travels these routes, it eventually converges on the same left frontal language regions that handle speech/language output [pmc.ncbi.nlm.nih.gov]. For example, if one is reading silently for comprehension, Broca’s area might show modest activity for syntactic structuring; if one is reading aloud or subvocalizing, the motor speech areas in the frontal lobe become active to articulate or simulate the words. Functional connectivity analyses have explicitly demonstrated that when we engage in a reading task, the VWFA increases its synchronization with Broca’s area and other language-related frontal regions [pmc.ncbi.nlm.nih.gov]. This finding supports a cooperative network model: the moment you recognize a written word, your brain’s speech and language apparatus is recruited to derive meaning and, if needed, pronunciation. In bilinguals, interestingly, the VWFA can even split or differentiate by writing system (for example, one section responding to English words and an adjacent section to Chinese characters), yet both connect to the shared semantic system – a testament to the plasticity and integration of the reading network [sciencedirect.com; anthro1.net].
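
Functional connectivity of the kind mentioned above is commonly quantified as the correlation between the activity time courses of two regions. The sketch below illustrates that calculation on synthetic signals only. The `simulate_pair` helper, its `coupling` parameter, and the two conditions are assumptions made for the example; no real VWFA or Broca's area data are used, and the printed numbers carry no empirical meaning.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def simulate_pair(coupling, n=500, seed=0):
    """Two synthetic signals that share a common component.

    Larger `coupling` means the shared component dominates each signal.
    """
    rng = random.Random(seed)
    shared = [rng.gauss(0, 1) for _ in range(n)]
    region_a = [coupling * s + rng.gauss(0, 1) for s in shared]
    region_b = [coupling * s + rng.gauss(0, 1) for s in shared]
    return region_a, region_b


# Hypothetical conditions: weak coupling stands in for a baseline state,
# strong coupling for a reading task.
baseline = pearson(*simulate_pair(coupling=0.3))
reading = pearson(*simulate_pair(coupling=1.5))
print(f"baseline r = {baseline:.2f}, reading r = {reading:.2f}")
```

A task-related rise in this correlation is the sort of change the connectivity analyses describe, although published analyses typically add preprocessing and statistical controls that are omitted here.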

To summarize, reading is the process of translating visual symbols into the language code, and the brain accomplishes this via the occipital and occipitotemporal cortices funneling information into the left perisylvian language network. The occipital lobe contributes feature detection of text, the occipitotemporal VWFA recognizes letter patterns as words, the parietal lobe aids in phonological assembly (especially in learning stages or for difficult words), and the temporal and frontal lobes extract meaning and enable pronunciation. Reading and listening ultimately converge on common downstream mechanisms – once words are recognized (whether heard or seen), we access meaning and syntax in a modality-independent fashion. This is why, for instance, a person with a left temporal lobe stroke might have trouble understanding both spoken and written language (the bottleneck being semantic access), whereas a person with a VWFA lesion might only have trouble with reading (since speech bypasses visual decoding). Modern neuroimaging and lesion studies continue to refine this picture, but the consensus is that written language piggybacks on the pre-existing spoken language circuitry, introducing a specialized visual processing stage at the front end.

Contemporary Models and Mechanisms of Language Processing

In light of findings from the past five years, neuroscientists view language processing in the brain as a product of specialized networks, inter-regional connectivity, and dynamic neural coding. Several key themes characterize the current state-of-the-art understanding:

- Distributed and Interconnected Language Network: The concept of a strictly localized “language center” has evolved into a network-based perspective. The core language regions (left IFG, STG, etc.) form a tightly interconnected network that is highly selective for language tasks [pubmed.ncbi.nlm.nih.gov]. This network shows strong intrinsic functional connectivity (even at rest) and operates as a coherent unit during language comprehension or production. Recent large-scale fMRI studies, including precision fMRI mapping in hundreds of individuals, have yielded probabilistic atlases of this language network, confirming that while individuals vary, the left frontal and temporal language regions are strongly coupled and reliably activated by language across people [pubmed.ncbi.nlm.nih.gov; nature.com]. Moreover, these language-specific regions are now distinguished from neighboring domain-general regions (like the multiple-demand executive network). For example, parts of Broca’s area are language-selective, while adjacent parts handle general cognitive difficulty – careful fMRI mapping can dissociate these, refining our localization of “true” language cortex [journals.sagepub.com]. Thus, the state of the art is a precisely defined language network that is distinct in function and connectivity from other brain networks, rather than a vague Broca/Wernicke area definition.

- Dual-Stream and Parallel Processing: Contemporary models embrace the idea of parallel pathways in language processing – notably the dual-stream model for speech (ventral for meaning, dorsal for phonology) and analogous dual routes for reading (direct vs. phonological) [frontiersin.org; nature.com]. These models explain how the brain can simultaneously process multiple aspects of language. For instance, as we listen to a sentence, we parse phonetics and predict upcoming words (dorsal stream engaging frontal cortex) even as we interpret the meaning of earlier words (ventral stream engaging temporal cortex). The two streams interact and converge on unification areas (like Broca’s area) that combine sound-to-meaning mappings into a coherent interpretation. Recent studies (using MEG, ECoG) support this with timing evidence: early activity in auditory cortex splits into parallel processing streams that later reunite during understanding. Such evidence has refined the localization of sub-functions: e.g., posterior STG (area Spt) as a sensorimotor interface, anterior STG/MTG as semantic hubs, inferior frontal as a unification site [frontiersin.org]. The mechanism is not strictly sequential but massively parallel.

Time Dynamics and Predictive Coding: Advances in intracranial recording and magnetoencephalography have shed light on how the brain encodes language in time. We now appreciate that language processing is highly incremental and predictive. The brain uses predictive coding, meaning it actively anticipates upcoming words or sounds based on context, which influences neural responses. For example, the N400 effect (a well-known event-related potential, or ERP, signal for semantic processing) is smaller for expected words, indicating the brain had pre-activated that word’s representation. On the neural level, cortical oscillations in auditory regions entrain to the rhythm of speech, segmenting syllables and words. The left hemisphere, with its shorter temporal integration windows, captures rapid phonetic changes, whereas the right tracks slower fluctuations such as prosody (frontiersin.org). This division of labor in time is a proposed mechanism (the “asymmetric sampling in time” model) explaining hemispheric differences. In practical terms, current models posit that neuronal populations encode language at multiple timescales – phonemes (~30 ms), syllables (~200 ms), and even multi-second phrase structures – through nested oscillatory activity. These temporal coding strategies allow efficient parsing of speech and might also apply to reading (where eye movements and fixation durations reflect chunking of text).

Integration with Cognitive and Social Processes: Modern research underscores that language does not work in isolation from other cognitive domains. Semantic processing, for instance, overlaps with wide networks involved in general conceptual knowledge – meaning is distributed across sensory and association cortices (e.g., understanding the word “dog” activates auditory areas for barking, visual areas for shape, etc., in addition to “language” areas). Syntactic processing likewise may draw on working memory and cognitive control networks to handle complex sentences. One hot topic has been whether syntax has a dedicated neural substrate or if it piggybacks on semantics; some 2020s studies (Fedorenko et al.) argue that there is not a completely separate “syntax module”, as the same core language regions handle both syntactic and semantic processing depending on task (researchgate.net). Others (Friederici et al.) suggest specific pathways (like a frontal to temporal-parietal loop) preferentially handle hierarchical structure. This debate is ongoing but illustrates the trend of refining our understanding of mechanisms of language – whether distinct circuits for syntax vs. semantics, or a flexible single network that can do it all. Additionally, pragmatics and social aspects of language (like Theory of Mind in language, understanding intentions, sarcasm) recruit frontal and temporal poles and midline regions. The state-of-the-art models integrate these findings by proposing a core language network that interacts with a “semantic store” network, a social cognition network, an emotional prosody network, etc., depending on the context (frontiersin.org). For example, Hagoort’s 2019 “multiple-network” model describes language as the combination of a core syntax/phonology network plus multiple interface networks (memory, control, emotion, etc.) (researchgate.net).

Subcortical Contributions: Another aspect of current understanding is the role of subcortical structures (basal ganglia, thalamus, cerebellum) in language. These were historically overlooked in favor of cortical areas, but recent work highlights their importance in fluent language function. The basal ganglia (especially the striatum) are involved in language sequencing and automatization – for instance, in grammar (rule-based conjugation) and in initiating/switching speech segments. Parkinson’s disease, which involves basal ganglia dysfunction, can produce speech timing deficits or difficulty in sentence processing. The thalamus acts as a relay that can influence language through attention and perhaps some lexical retrieval processes (thalamic strokes sometimes cause aphasia). The cerebellum, classically associated with motor coordination, has been implicated in modulating the rhythm and flow of speech and in error correction during language (e.g., helping with predicting sensory feedback in speech, and even in higher-level prediction of words). A 2023 connectivity study in individuals with dyslexia found increased involvement of the right cerebellum in reading, suggesting a compensatory role (nature.com). All these findings align with the idea that language is an embodied, whole-brain function – subcortical loops fine-tune cortical language processing, contributing to the speed, fluidity, and automatization of language skills.

In sum, current neuroscientific models of language portray it as a widely distributed yet functionally specialized system. Localization is now understood in terms of networks (frontal-temporal-parietal circuits) rather than single gyri, and mechanisms are understood in terms of interactions (streams, feedback loops, and predictive coding) rather than simple one-way relay. This nuanced understanding is continuing to grow with methodologies like high-field fMRI, which can parse sub-millimeter functional zones, and machine-learning analyses that compare brain activity to artificial neural network models of language. Excitingly, researchers are even comparing how modern AI language models activate “semantic” or “syntactic” units to how the brain does, finding intriguing parallels (nature.com). Such interdisciplinary approaches are refining our grasp of the neural basis of language, moving us closer to answering age-old questions about how our brains turn sound and sight into the rich tapestry of human language.
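
The notion of prediction discussed above can be made concrete with word surprisal, a measure often used in computational work to quantify how unexpected a word is in its context. The sketch below is only an illustration and not a model of the brain: it assumes a toy corpus invented for this example, a simple bigram model with add-alpha smoothing, and a hypothetical helper named `bigram_surprisal`. Under these assumptions, a highly expected continuation gets low surprisal, mirroring the smaller N400 reported for expected words.

```python
import math
from collections import Counter


def bigram_surprisal(corpus, context, word, alpha=1.0):
    """Return the surprisal (in bits) of `word` given the preceding `context` word.

    Uses a bigram model with add-alpha smoothing estimated from `corpus`.
    This is an illustrative helper, not an established library function.
    """
    tokens = corpus.lower().split()
    vocab = set(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    # Total number of times `context` is followed by any word.
    context_count = sum(c for (first, _), c in bigrams.items() if first == context)
    prob = (bigrams[(context, word)] + alpha) / (context_count + alpha * len(vocab))
    return -math.log2(prob)


# Toy corpus made up for this example (not real data).
toy_corpus = "the dog barks . the dog runs . the dog barks . the cat sleeps ."

expected = bigram_surprisal(toy_corpus, "dog", "barks")     # frequent continuation
unexpected = bigram_surprisal(toy_corpus, "dog", "sleeps")  # unseen continuation

print(f"surprisal of 'barks' after 'dog':  {expected:.2f} bits")
print(f"surprisal of 'sleeps' after 'dog': {unexpected:.2f} bits")
```

In studies that compare language models with brain activity, surprisal is typically computed from much larger models; the bigram model here is chosen only to keep the example self-contained.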

Comparative Insights from Primate Studies

Human language is unique, but its neural underpinnings have evolutionary precursors in the primate brain. Studies comparing humans with non-human primates (like chimpanzees and macaques) have provided insight into which aspects of our language network are truly novel and which are built on older communication systems. One striking finding is that some brain asymmetries once thought exclusive to human language are present in other primates. For example, chimpanzees and baboons show leftward asymmetry in brain regions homologous to human language areas – notably, the planum temporale (part of the superior temporal region related to auditory processing) tends to be larger on the left in these animals, just as it is in human brains (mdpi.com). Such structural asymmetries in non-language-trained primates raise the question of what functions they subserve; researchers hypothesize they could relate to a lateralized communication system (e.g. gestural or vocal communication biases) or other domain-general processes that predate language (mdpi.com). Indeed, chimpanzees have been observed to prefer using one hand for gestures (often the right hand, implying left hemisphere control of communicative gestures), suggesting a primitive lateralization for communication that could be a foundation for the evolution of language later on (mdpi.com).
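
Structural asymmetries like the one described above are usually summarized with a normalized index. The sketch below assumes one common convention, an asymmetry quotient AQ = (R − L) / ((R + L) / 2), in which negative values indicate a leftward bias; sign conventions differ between studies, and the function name and the measurements are hypothetical values chosen purely for illustration.

```python
def asymmetry_quotient(left, right):
    """Return AQ = (R - L) / ((R + L) / 2).

    Assumed convention: negative values mean the left-hemisphere
    measurement is larger (leftward asymmetry); positive means rightward.
    """
    if left <= 0 or right <= 0:
        raise ValueError("measurements must be positive")
    return (right - left) / ((right + left) / 2.0)


# Hypothetical planum temporale surface areas (arbitrary units, not real data).
left_area = 120.0
right_area = 100.0

aq = asymmetry_quotient(left_area, right_area)
direction = "leftward" if aq < 0 else "rightward" if aq > 0 else "none"
print(f"AQ = {aq:.3f} ({direction} asymmetry)")
```

Dividing by the mean of the two sides keeps the index comparable across brains of different overall size, which matters when comparing species.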

In terms of brain regions, primates possess analogues of Broca’s and Wernicke’s areas, though they are not used for language in the human sense. The Broca’s area homologue in monkeys (part of premotor cortex, area F5) is involved in orofacial movements and is famously associated with “mirror neurons” for actions – some propose this was repurposed during human evolution for complex sequencing of speech. The temporal lobe auditory regions in monkeys and apes are finely tuned to species-specific vocalizations (e.g. monkey calls), performing analysis of vocal sounds somewhat akin to how our STG analyzes speech. For instance, macaque STG can distinguish different call types and even who is calling, indicating an ability to process meaningful sound patterns. However, what monkeys and apes lack is the full neural circuitry to map those sounds to a rich lexicon and syntax. A critical difference is the development of the arcuate fasciculus – in humans this fiber tract robustly connects temporal cortex to frontal cortex (enabling the dorsal stream for repetition and complex syntax), whereas in chimpanzees and monkeys the arcuate is much smaller or does not connect the same frontal-temporal regions (nature.com; pnas.org). This difference is thought to be a key anatomical change that allowed the evolution of vocal imitation and recursive language structure in humans. Comparative diffusion MRI studies have shown that only humans have a strong left-lateralized arcuate fasciculus connecting to frontal lobe language areas, which correlates with our unique linguistic capacities (pmc.ncbi.nlm.nih.gov).

Additionally, while primates do not have language, they do share some cognitive building blocks: they can perceive basic sound patterns, have some concept of sequence ordering, and can intentionally communicate (gesturally or with calls to a limited extent). By studying how ape and monkey brains handle these tasks, scientists infer which neural processes are ancient. For example, both humans and monkeys show activation in the superior temporal gyrus for vocal sounds (speech or calls), indicating this auditory processing stage is conserved. Some experiments have trained apes on simple artificial grammar tasks; apes show some grasp of sequential rules but do not recruit a Broca’s-like syntax network as humans do, highlighting a gap in neural processing for hierarchy. In sum, primates demonstrate precursors to language networks: they have asymmetrical auditory regions and frontal vocal areas, and their communication engages these brain areas in limited ways, but the extensive connectivity and specialized functional tuning seen in humans (especially for syntax and open-ended vocabulary) are lacking. These comparative findings support the idea that human language circuitry was built on top of general primate auditory-motor circuits, honed by natural selection and perhaps gene-culture coevolution (the FOXP2 gene is one famous example, affecting speech motor learning across species). Thus, studying primates helps us appreciate which parts of our language brain are evolutionary innovations (e.g., expanded frontal-temporal loops, enhanced lateralization) and which are conserved (e.g., basic auditory decoding in STG).

Conclusion

Over the last five years, our understanding of language processing in the brain has become increasingly detailed and network-oriented. We now know that language engages a left-lateralized network of frontal, temporal, and parietal regions that work together to decode and produce linguistic information (frontiersin.org; pubmed.ncbi.nlm.nih.gov). This network is specialized – responding vigorously to language and relatively little to other cognitive tasks (pubmed.ncbi.nlm.nih.gov) – yet it does not act alone. It interfaces with auditory and visual cortices for input, motor cortices for output, and a host of supportive systems for memory, attention, emotion, and social cognition (frontiersin.org). Language processing is predominantly housed in the left hemisphere, reflecting strong lateralization, but the right hemisphere provides crucial complementary functions, especially for prosody and high-level context (frontiersin.org). Both spoken and written language engage overlapping high-level mechanisms (lexical and syntactic processes in the temporal and frontal lobes) but differ in their early processing stages – spoken language routes through auditory cortex and phonological buffers, whereas written language introduces the visual word form system in the occipitotemporal cortex (pmc.ncbi.nlm.nih.gov; frontiersin.org). Modern research has mapped these pathways with unprecedented precision, revealing how phonemes are represented in superior temporal gyrus populations, how the brain’s rhythms align with speech, and how reading circuits integrate with speech circuits (pmc.ncbi.nlm.nih.gov).

The state-of-the-art view is that language in the brain is emergent from a network of interacting regions, rather than any single center. Localization of function is real – e.g. damage to Broca’s area versus Wernicke’s area yields different aphasic deficits, indicating those regions’ special roles – but these regions operate as hubs in a dynamic circuit. Language functions (phonology, syntax, semantics) are distributed across these hubs and their connections, and the brain flexibly recruits additional regions as needed by the linguistic task, for instance engaging memory areas for narrative recall or emotional areas for affective language (frontiersin.org). This explains why earlier imaging studies found “wide-spread activation” for language: language tasks tap into many brain systems beyond just core language regions (frontiersin.org). It also aligns with clinical observations that recovery from aphasia often involves perilesional or right hemisphere regions compensating – the network can reconfigure to some extent.

In conclusion, current neuroscience portrays language processing as a highly lateralized, multi-component network function. Key cortical areas in the left frontal and temporal lobes form the backbone for speaking and understanding, the parietal and occipital lobes provide essential input–output interfaces (connecting sounds, symbols, and meanings), and numerous other regions contribute to the richness of real-world language use. Auditory language processing and visual language processing both ultimately activate this core network, illustrating the brain’s remarkable ability to integrate different modalities into a unified linguistic experience. Ongoing research continues to refine our knowledge – for example, using ever-more precise imaging to chart language maps, exploring the oscillatory codes that carry linguistic information, and examining how the brain’s language network develops in children and adapts in bilinguals. These efforts deepen our understanding of one of the most distinctive human capacities – our ability to communicate through language – and how this ability is implemented in the intricate circuitry of the brain.

Sources: Recent neuroscientific literature and reviews on language networks, lateralization, and language processing mechanisms (frontiersin.org; pubmed.ncbi.nlm.nih.gov; pmc.ncbi.nlm.nih.gov; nature.com), as cited throughout. The information reflects findings from fMRI, ECoG, lesion studies, and comparative research, emphasizing developments from roughly 2019–2024 in the field of the neurobiology of language.

Lina Lopes

Lina Lopes  Cerebral Sync - Week 4/13
