Intro

A generation of artists has turned their nervous systems into instruments. Since the late 2000s, the democratization of EEG technology — from Emotiv’s sleek neural helmets to OpenBCI’s open-source cranial rigs — has opened the skull not to surgery, but to sculpture, performance, and code.

What follows is a timeline of artistic experiments where brainwaves leave the laboratory and enter the realm of light, sound, and fabric. From stitched thought patterns and immersive opera to neural VR and machine-generated memory ghosts, each project in this archive employs EEG (particularly Emotiv, including its 32-channel model, and OpenBCI) to translate electric whispers of the mind into aesthetic events.

Some use machine learning. Others rely on raw signal mappings. All invite us to ask:

What happens when thought becomes artifact?

Timeline of Brainwave-Based Artworks

2013–2015 — Brainwriter

Artists/Collaborators: Tempt One (Tony Quan), Daniel R. Goodwin, OpenBCI, Not Impossible Labs, Graffiti Research Lab (Zach Lieberman, Theo Watson, James Powderly) (California, London)

Device: Custom OpenBCI-based EEG headset with Olimex active electrodes and Tobii eye-tracking

Medium: Digital graffiti and interactive installations

Notes: An open-source brain-computer interface enabling a paralyzed graffiti artist to create art using brainwaves and eye movements.

Description

Brainwriter is an open-source project developed to help Tempt One, a graffiti artist paralyzed by ALS, create art again. By combining a custom EEG headset (built with OpenBCI and Olimex electrodes) with Tobii eye tracking, the system translates brain signals and eye movements into digital drawings. The software, built with openFrameworks, supports point-by-point sketching, line bending, and interaction with real-world images, enabling Tempt One to produce intricate digital graffiti. The project also featured an interactive installation at the Barbican Centre in London, where participants used their brainwaves and eye movements to control a game, demonstrating the potential of brain-computer interfaces in art and communication. The initiative builds on the earlier EyeWriter project and emphasizes the power of open-source collaboration in assistive technology (Daniel R. Goodwin). (There was no explicit use of machine learning; the project relied on signal processing and direct mappings from EEG and eye-tracking data to visual output.)

2013 — NeuroKnitting

Artists: Varvara Guljajeva & Mar Canet (Barcelona)

Device: Emotiv EPOC (14 channels)

Medium: Knitted textiles from EEG patterns

Notes: A scarf knitted from the electrical activity of the brain.

Description

A project that transformed brain signals into knitwear. The artists captured the brain activity of participants listening to classical music (Bach) and converted this data into a knitted scarf pattern. Using a 14-channel EEG headset, the system measured three aspects of mental state (relaxation, excitement, and cognitive load) over 10 minutes of listening, then translated each second of brainwave data into stitch patterns (var-mar.info). The result was a series of unique scarves, each generated from one listener’s neural activity and forming a personal piece of generative EEG art (designboom.com). (There was no explicit use of machine learning; the project relied on direct mappings from Emotiv metrics to visual patterns.)
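The per-second mapping described above can be sketched roughly as follows. This is a minimal illustration, assuming each second yields three metrics normalized to the range 0 to 1; the stitch symbols and the quantization rule are hypothetical and are not the artists' actual pattern logic.

```python
# Hypothetical stitch symbols (low, medium, high intensity).
# The real project's pattern vocabulary is not documented in this article.
STITCHES = (".", "x", "#")


def metric_to_stitch(value: float) -> str:
    """Quantize one metric in [0, 1] into one of the stitch symbols."""
    clamped = min(max(value, 0.0), 1.0)
    index = min(int(clamped * len(STITCHES)), len(STITCHES) - 1)
    return STITCHES[index]


def second_to_row(relaxation: float, excitement: float, load: float) -> str:
    """Turn one second of (relaxation, excitement, cognitive load) into a row."""
    return "".join(metric_to_stitch(v) for v in (relaxation, excitement, load))


def session_to_pattern(samples):
    """Convert a list of per-second metric triples into a list of pattern rows."""
    return [second_to_row(*sample) for sample in samples]
```

Under these assumptions, a 10-minute listening session sampled once per second would yield 600 rows, one per second of recorded data.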

2015 — Brainlight

Artist: Laura Jade (Sydney)

Device: Emotiv EPOC / EPOC+

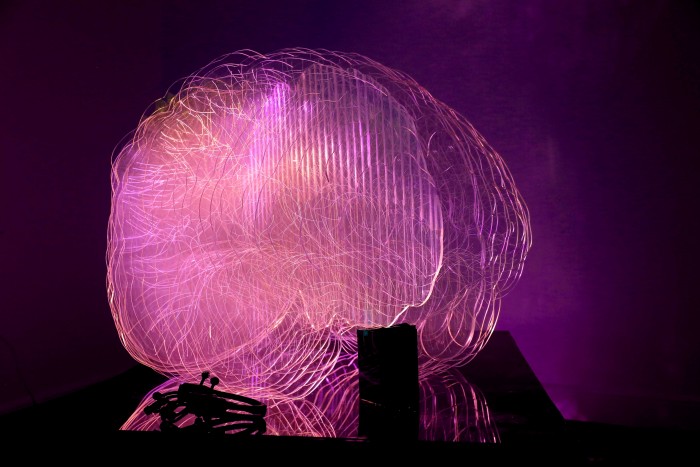

Medium: Illuminated brain sculpture

Notes: Real-time light and sound feedback based on emotional EEG data.

Description

An interactive sculpture shaped like a brain that lights up and emits sounds in response to the participant’s brain activity. Created as part of a Master’s project, the work features a large acrylic brain structure lit from within by LEDs. The installation is controlled by an Emotiv EEG headset, so the sculpture glows according to changes in the user’s brainwaves, which are captured wirelessly (laurajade.com.au). The software monitors specific brainwave bands, namely alpha (meditation), theta (focus), and beta (excitement), as well as detected emotional states and facial expressions, and translates these into light and sound variations within the sculpture (laurajade.com.au). Through this setup, the audience experiences a kind of cyber-activation of the brain, where thoughts and emotions control the artwork in real time. (There was no indication of ML use; the project employed Emotiv’s emotional detection suite and direct mappings to audio-visual feedback.)
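To make the idea of band-driven lighting concrete, here is a minimal sketch of estimating theta, alpha, and beta power from one window of samples and turning the result into an RGB value. It is not the software used in Brainlight: the band edges are conventional approximations, the naive DFT stands in for whatever processing the Emotiv software performs, and the color assignment (beta to red, alpha to green, theta to blue) is a hypothetical choice.

```python
import cmath
import math

# Conventional EEG band edges in Hz (approximate; definitions vary by source).
BANDS = {"theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_powers(samples, sample_rate):
    """Estimate power per band with a naive DFT (adequate for short windows)."""
    n = len(samples)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        freq = k * sample_rate / n  # center frequency of DFT bin k
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples)
        )
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += abs(coeff) ** 2
    return powers


def powers_to_rgb(powers):
    """Map relative band power to an (R, G, B) tuple. Hypothetical assignment."""
    total = sum(powers.values()) or 1.0
    return tuple(round(255 * powers[name] / total) for name in ("beta", "alpha", "theta"))
```

For example, a one-second window sampled at an assumed 128 Hz that contains a pure 10 Hz oscillation puts essentially all of its power in the alpha band, so the sketch would drive only the green channel.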

2016 — Noor: A Brain Opera

Artist: Ellen Pearlman (Hong Kong)

Device: Emotiv EPOC+

Medium: Immersive performance opera

Notes: The performer’s emotional EEG states triggered visuals, sound, and text.

Description

Immersive performance described as the world’s first “brain opera.” In Noor, a performer positioned at the center of a 360° theater controls every element of a multimedia opera (panoramic videos, soundscapes, and even fragments of the libretto) using only her brain activity. The premiere took place at the ISEA 2016 festival in Hong Kong. Using the Emotiv EPOC+ headset, the performer’s emotional states trigger segments of video, audio, and text throughout the performance, while her brainwaves are displayed in real time for the audience (emotiv.com). For example, levels of excitement or meditation detected by the EEG can trigger specific scenes or changes in the music. Noor thus explores the intersection of BCI and performing arts, where the artist’s mind becomes the interface that drives the narrative. (The piece used Emotiv’s emotional suite to map emotions to stage events, but did not involve a custom-trained ML algorithm.)

2016 — Dual Brains

Artists: Eva Lee & Aaron Trocola (New York City)

Device: OpenBCI (custom Ultracortex headset)

Medium: Audiovisual performance

Notes: EEG and ECG data from two performers generated synchronized visuals and sound in real time.

Description

Three-minute audiovisual performance featuring two performers connected through EEG. Presented at Art-a-Hack 2016 in New York City, Dual Brains featured artists Eva Lee and Aaron Trocola wearing OpenBCI headsets simultaneously to generate sound and visuals in real time. The installation captured both EEG (brainwaves) and ECG (heartbeats), translating this biodata into projected visuals and soundscapes. The result was a shared audiovisual environment created by their merged neural and cardiac signals (evaleestudio.com). Over the course of the performance, the two participants’ brainwave patterns and heart rhythms began to synchronize, influencing the aesthetic output. The piece is an artistic exploration of empathic connection and biofeedback between two brains. (No machine learning was used; the project relied on real-time integration of filtered EEG and ECG signals.)

2016 — Agnosis: The Lost Memories…

Artist: Fito Segrera (Paris)

Device: OpenBCI (open-source EEG headset)

Medium: Interactive installation

Notes: Drops in attention, measured via EEG, triggered AI-generated memory reconstructions.

Description

Interactive installation that explores memory loss through EEG and artificial intelligence. Colombian artist Fito Segrera developed a system in which an OpenBCI headset monitors his level of attention; when attention drops, the system intervenes (makery.info). During these lapses, a camera captures a photo of the surrounding environment. In parallel, image recognition algorithms, online tagging services, and Google’s auto-suggestion interpret both the EEG signals and the captured images to generate new, associative visual content, which is compiled into a kind of visual “logbook” (makery.info).

The piece, titled Agnosis: The Lost Memories…, presents viewers with a mosaic of true and false memories — real photographs juxtaposed with fabricated images constructed by algorithms based on the artist’s brain activity. In essence, it is a form of auto-generated augmented reality that questions how attention and technology shape memory. (Machine learning is central to the work: computer vision algorithms interpret EEG data indirectly and autonomously to produce new visual content, making AI not just a tool, but a co-creator in the artwork.)
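The attention-drop trigger at the heart of this piece can be sketched as a simple threshold detector. This is only an illustration under stated assumptions: attention is taken to be a score between 0 and 1, and the `low` and `high` thresholds, like the function name, are hypothetical. The artist's actual detection logic is not described in the sources cited here.

```python
def attention_lapses(scores, low=0.3, high=0.5):
    """Return the indices at which attention falls below `low`.

    After a lapse is reported, no new lapse is reported until attention
    recovers above `high` (hysteresis), so one long dip counts only once.
    """
    lapses = []
    armed = True  # ready to report the next drop
    for index, score in enumerate(scores):
        if armed and score < low:
            lapses.append(index)
            armed = False
        elif not armed and score > high:
            armed = True
    return lapses


# Each reported index marks a moment when an installation like this
# would trigger its camera capture.
print(attention_lapses([0.8, 0.6, 0.25, 0.2, 0.4, 0.7, 0.28]))  # → [2, 6]
```

The two-threshold design is a common way to avoid firing repeatedly while a noisy signal hovers around a single cutoff.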

2018 — Emotiv VR (Freud’s Last Hypnosis)

Artists/Project: LS2N & ESBA-TALM, in collaboration with filmmaker Marie-Laure Cazin (Angers/Nantes, France)

Device: Emotiv EPOC (14 channels)

Medium: Neuro-interactive virtual reality film

Notes: EEG-based emotional and cognitive states determined which point of view the viewer experienced within the film.

Description

A neuro-interactive VR film experience that merges immersive cinema with brain-computer interfaces to alter narrative perspective in real time. Developed by a French research group (LS2N) in collaboration with the School of Fine Arts of Tours/Angers/Le Mans (ESBA-TALM) and filmmaker Marie-Laure Cazin, the project places the viewer inside a 360° cinematic reenactment of Freud’s Last Hypnosis. While immersed in the scene, the viewer wears an Emotiv EPOC headset, enabling them to “embody” either Freud’s or the patient’s point of view, based on their mental state (emotiv.com).

The EPOC’s EEG sensors capture emotional and cognitive responses in real time, enabling implicit interaction — for instance, levels of engagement or relaxation may influence which storyline branch the viewer is shown. The piece debuted at international events in 2018 (including ACM TVX in Seoul) and was later presented publicly at festivals in 2019.

(The project employs EEG-based state analysis to dynamically guide the viewer’s path, likely using Emotiv’s built-in emotional metrics rather than a custom-trained machine learning model.)

2021 — Somatic Cognition Immersive Installation

Artist/Collective: Ashley Middleton (amiddletonprojects) and team (Berlin/London)

Device: OpenBCI headset (project supported by OpenBCI)

Medium: Immersive installation

Notes: EEG data linked to a virtual body system using real-time machine learning.

Description

Immersive installation combining EEG signals with a virtual body system to explore somatic surveillance and non-conscious cognition. Developed during a residency at Exposed Arts Projects (London, 2020–2021) and presented in EDGE Neuroart workshops in Berlin, the piece offered participants a meditative, embodied experience within a “virtual sensory system of the body” (openbci.com).

The team used an OpenBCI headset to capture EEG data and feed it into a sensory feedback loop, mapping it in real time to a virtual environment built in TouchDesigner. The technical system integrated Python, BrainFlow, and Wekinator (an interactive machine learning toolkit) to train real-time mappings between brain activity and aesthetic output (openbci.com). The first exhibition was planned for September 2021 in London, demonstrating how AI techniques can translate non-conscious brain signals into artistic experience.

(Machine learning plays a key role: Wekinator was used to train models that connect EEG data to visual and sonic parameters, enabling adaptive interaction.)
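Wekinator receives its inputs as OSC messages over UDP, by default at the address `/wek/inputs` on port 6448. The sketch below shows how a Python script could package a list of EEG-derived features into such a message using only the standard library. It is a hedged illustration, not the team's code: the feature values are placeholders, the helper names are hypothetical, and in a real pipeline the features would come from a library such as BrainFlow.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, values) -> bytes:
    """Build an OSC 1.0 message carrying 32-bit big-endian floats."""
    values = list(values)
    type_tags = "," + "f" * len(values)
    payload = struct.pack(f">{len(values)}f", *values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_features(features, host="127.0.0.1", port=6448):
    """Send one feature vector to a Wekinator instance (default input port)."""
    packet = osc_message("/wek/inputs", features)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return len(packet)
```

Calling `send_features([0.5, 1.0])` once per analysis window would stream a two-value feature vector to Wekinator, which can then be trained interactively to map those inputs to visual or sonic parameters.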

2024 — The Echo Wave Project

Authors: Digital Media students, Toronto Metropolitan University (Mike Faragalla et al.)

Device: Emotiv Insight (5-channel EEG)

Medium: Interactive installation

Notes: Real-time visualization of emotional states using EEG and TouchDesigner.

Description

Interactive art installation focused on visualizing mental health through brainwave data. Presented at the Future Makers exhibition in Toronto, Echo Wave invited participants to wear an Emotiv Insight headset and see their emotional states unfold in real time. The system extracted EEG-based indicators such as relaxation, stress, and excitement from the Emotiv headset (derivative.ca) and mapped them through a TouchDesigner visual pipeline.

As the participant’s mental state shifted, projected particles and abstract shapes changed dynamically, following a therapeutic color palette: green for calm, blue for focus, red for tension (derivative.ca). This visual language let users see their brainwaves materialize as abstract art, making normally invisible internal states visible. The result is a poetic and educational exploration of mental nuance, showing how accessible interactive EEG art has become in 2024.

(No trained ML models were used; the project relied on Emotiv’s emotional metrics and focused on immediacy and accessibility.)
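The color palette described above (green for calm, blue for focus, red for tension) suggests a simple weighted blend. The following sketch assumes each state arrives as a non-negative score; the metric names and the blending rule are hypothetical and do not reproduce the students' TouchDesigner network.

```python
# Palette from the project description: green = calm, blue = focus, red = tension.
PALETTE = {
    "calm": (0, 255, 0),
    "focus": (0, 0, 255),
    "tension": (255, 0, 0),
}


def blend_color(metrics):
    """Blend the palette colors, weighting each by its (non-negative) score."""
    weights = {name: max(metrics.get(name, 0.0), 0.0) for name in PALETTE}
    total = sum(weights.values())
    if total == 0:
        return (0, 0, 0)  # no signal: leave the visuals dark
    return tuple(
        round(sum(weights[name] * PALETTE[name][channel] for name in PALETTE) / total)
        for channel in range(3)
    )


print(blend_color({"calm": 0.75, "tension": 0.25}))  # → (64, 191, 0)
```

A mostly calm participant therefore sees a predominantly green output that shifts toward red as the tension score rises.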

Lina Lopes
